LLMs are already adding value to trading teams.

The advent of OpenAI’s Chat Generative Pre-trained Transformer (ChatGPT) tool has generated as many column inches as it has AI poetry. Its potential, well hyped by both users and the media, is to answer natural language questions, including contextual points, with natural language answers based on massive data sets. While ChatGPT bases its answers on publicly available internet information that is a year or more old, other GPT tools have been trained on more current and more specific data sets.

In capital markets, many technology providers have begun developing artificial intelligence (AI) systems using large language models (LLMs), which learn the rules of syntax and semantics well enough to provide natural language processing (NLP) search across massive sets of structured or unstructured information. Anju Kambadur, Bloomberg’s head of AI engineering, says, “For structured data, access to market data and analytics has historically required expertise in programming computer systems and learning the relevant query languages and APIs. LLMs, on the other hand, offer easy access to such structured data by translating queries in natural language to and from the languages these computer systems understand. Finally, LLMs have shown a lot of promise in linking structured and unstructured data together and applying logical reasoning to help investment professionals make decisions.”

Bloomberg (BloombergGPT), trading venue LTX (BondGPT) and APEX E3 (ALICE) are among the leaders in launching AI tools that exploit LLMs. OpenAI’s GPT-3 was released in 2020 and had 175 billion parameters, which researchers at Bloomberg noted in their paper ‘BloombergGPT: A Large Language Model for Finance’ was “a hundredfold increase over the previous GPT-2 model, and did remarkably well across a wide range of now popular LLM tasks, including reading comprehension, open-ended question answering, and code generation.”

This successful use of natural language processing, replicated across LLMs, has shown that greater scale unlocks capabilities not seen in smaller models and increases the models’ capacity to learn, making them more viable for complex taxonomies. Given a single prompt containing a few examples to learn from, they can quickly pick up on what is required of them.
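As a rough illustration of what a prompt with “a few examples” looks like in practice, the Python sketch below assembles an instruction, two worked examples and a new question into a single block of text. The `Example` class, the `build_few_shot_prompt` function and the sample headlines are all hypothetical, invented for this illustration; the sketch only builds the text and does not call any model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """One worked question/answer pair shown to the model (hypothetical type)."""
    question: str
    answer: str


def build_few_shot_prompt(instruction: str, examples: list[Example], query: str) -> str:
    """Join an instruction, worked examples and the new query into one prompt string."""
    parts = [instruction.strip(), ""]
    for ex in examples:
        parts.append(f"Q: {ex.question}")
        parts.append(f"A: {ex.answer}")
        parts.append("")
    # The final question is left unanswered so the model completes it.
    parts.append(f"Q: {query}")
    parts.append("A:")
    return "\n".join(parts)


# Invented sample data, for illustration only.
examples = [
    Example("Classify the sentiment: 'Issuer beats earnings forecasts.'", "positive"),
    Example("Classify the sentiment: 'Issuer downgraded to junk.'", "negative"),
]
prompt = build_few_shot_prompt(
    "Answer in one word.",
    examples,
    "Classify the sentiment: 'Issuer misses coupon payment.'",
)
print(prompt)
```

The resulting string would then be sent to whichever model is in use; the examples steer the format and style of the answer without any retraining.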

“What you get first and foremost with a large language model, is something that is already trained to understand common language,” says Jim Kwiatkowski, CEO of bond trading venue LTX, which launched its BondGPT app this year. “That’s a significant undertaking and a significant usability enhancement. Where legacy systems typically employ point-and-click menus, and you need to work through those menus to find information, LLM allows you to simply type in the question that you want the answer to. Much of the work that we’ve had to do with large language models has been looking at the starting point, at the use case for customers who we’re trying to serve and the gap that existed.”

LTX’s BondGPT allows users to find bonds on the LTX network, and outside it, through natural language queries, reducing the time needed to onboard users and potentially supporting liquidity and price formation efficiency.

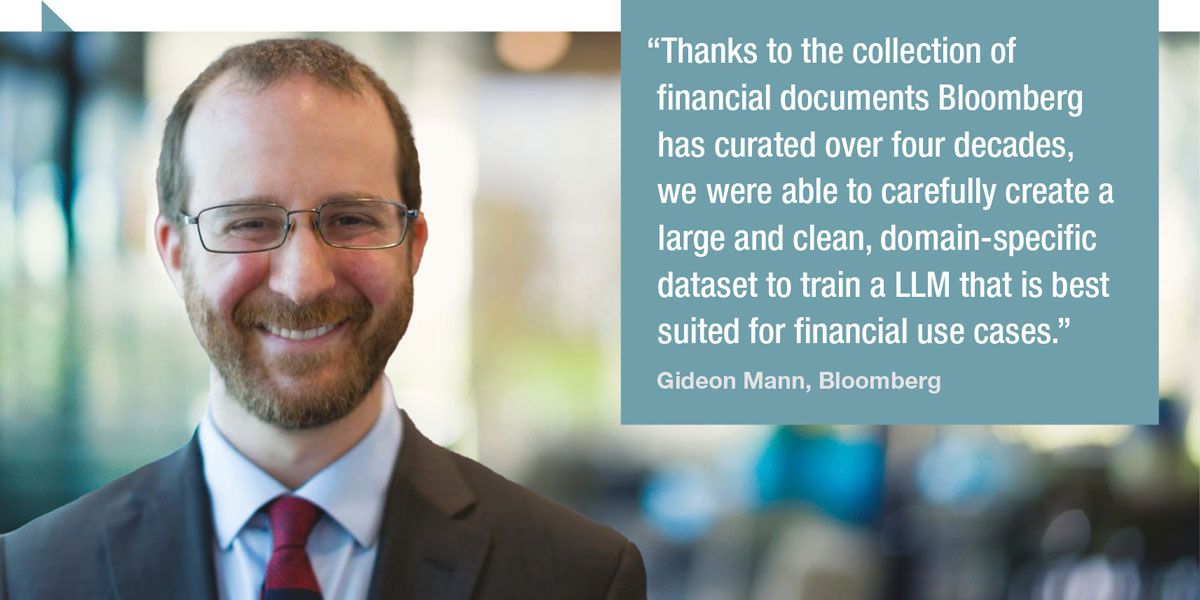

Gideon Mann, head of Bloomberg’s ML Product and Research team, said, “Thanks to the collection of financial documents Bloomberg has curated over four decades, we were able to carefully create a large and clean, domain-specific dataset to train an LLM that is best suited for financial use cases. We’re excited to use BloombergGPT to improve existing NLP workflows, while also imagining new ways to put this model to work to delight our customers.”

APEX E3’s ALICE tool lets users run natural language queries against huge sets of documents, spreadsheets and other content, reducing the work of extracting key points.

In each case, these tools replace conventional interfaces with natural language search and make the extract, transform, load (ETL) process that is key to data mining far simpler to manage.

I’m sorry, Dave…

Using ChatGPT directly for capital markets work would raise serious issues. ChatGPT is designed to provide an answer to the user and can ‘hallucinate’, making up fictional answers that appear to deliver what was asked, which makes its output unreliable. Its information is typically a year out of date and is subject to the biases of the internet material it has consumed.

Different tasks present different levels of difficulty. For example, named entity recognition can be particularly challenging in markets where codes and acronyms are often used to refer to companies.

“A question could reference bond market jargon or an abbreviation such as a ticker symbol, so the model needs to understand what a given string of characters means,” says Kwiatkowski. “What if there’s a common word that could also be a named entity? Should the large language model react to it as a word or understand that it’s a named entity? There’s so much to be assumed about context. In our market, traders expect to be able to speak the minimum required to be understood, so much of what we’ve done has been to make a conversational interface and a model that understands bond market vernacular. There’s an expectation that the software understands the context and will understand what was meant by the user.”

Challenges like these have made the development of specialised LLM systems for trading crucial in order to ensure reliability. Knowing how the technology can support traders, and understanding its limitations, will be invaluable for heads of desk as they seek to support the rollout and testing of new systems.
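The ticker ambiguity Kwiatkowski describes can be illustrated with a toy example. The Python sketch below uses a deliberately simple heuristic: a string is read as a ticker only if it is typed in capitals or is immediately followed by a number such as a coupon. The lookup table, the `find_tickers` function and the heuristic itself are assumptions made for this illustration; nothing here reflects how BondGPT or any production system resolves entities.

```python
import re

# Small illustrative lookup of tickers that are also ordinary words or letters.
KNOWN_TICKERS = {"F": "Ford Motor", "T": "AT&T", "ALL": "Allstate", "KEY": "KeyCorp"}


def find_tickers(query: str) -> list[str]:
    """Return symbols in the query that the toy heuristic treats as tickers."""
    # Split into alphabetic tokens and numbers such as 5.25 or 2033.
    tokens = re.findall(r"[A-Za-z&]+|\d+(?:\.\d+)?", query)
    found = []
    for i, tok in enumerate(tokens):
        symbol = tok.upper()
        if symbol not in KNOWN_TICKERS:
            continue
        # Cue 1: the trader typed it in capitals. Cue 2: a number follows,
        # as in the shorthand "key 4.1 2028" (issuer, coupon, maturity).
        next_is_number = i + 1 < len(tokens) and tokens[i + 1][0].isdigit()
        if tok.isupper() or next_is_number:
            found.append(symbol)
    return found


print(find_tickers("show me all bonds maturing in 2030"))  # "all" read as a word
print(find_tickers("ALL 5.25 2033 offers"))                # "ALL" read as a ticker
print(find_tickers("what is the key risk for T bonds"))    # only "T" read as a ticker
```

Rules this crude break down quickly, which is the point of the quote: a model that understands market vernacular has to infer from context what a hand-written rule can only guess.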

Banks have to be wary of regulators when developing such tools themselves, as anything fitting into the ‘artificial intelligence’ world can raise concerns amongst authorities keen to avoid automation of responsibility. No-one in the markets should forget Knight Capital’s near bankruptcy in 2012, when a faulty software deployment in its automated trading systems cost it US$460 million in 45 minutes.

Eric Heleine, head of trading desk & overlay management at Groupama Asset Management, who earned a certification in Prompt Engineering for ChatGPT from Vanderbilt University, says, “We are at the start of the evolutionary process, and the duration of the change could be very short, so we need to understand at least the minimum in order to get a perspective on the impact this might have upon the industry. That is particularly true in finance, where analysing and forecasting requires us to process large data sets. This technology is helping to distil that information; it also helps to explore a single concept in great depth. The time between having an idea, and turning that idea into a book, is shrinking rapidly.”

The future potential

The tools being developed carry risks of their own, which developers must recognise and mitigate.

“Can an LLM be gamed if you use an LLM out of the box?” asks Kwiatkowski. “Yes, so the company that chooses to use a commercially available LLM for a commercial purpose needs to take on the responsibility of ensuring that they’re not gamed, understanding the use case, understanding the users. You can put safeguards in place but these come at the expense of speed. In our case, we’re a broker-dealer. So we ensure that every answer that comes out of BondGPT is monitored by an AI-powered compliance rules engine which evaluates the response based on a number of compliance rules, including avoiding responses that contain investment advice.”

However, he notes that the system’s ability to respond at around the 20-second mark appears to cross a threshold of sorts for users in their demand for functionality.
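The idea of screening every answer against compliance rules before release can be sketched in a few lines of Python. LTX describes an AI-powered engine; the version below swaps in plain regular expressions purely for illustration, and the `Rule` class, the two rules and the `review_response` function are hypothetical. Real compliance policies would be far more extensive.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A named compliance check expressed as a regular expression (hypothetical)."""
    name: str
    pattern: re.Pattern


# Illustrative rules only, not any firm's actual policy.
RULES = [
    Rule(
        "investment_advice",
        re.compile(r"\b(you should|we recommend|i recommend)\s+(buy|sell|hold)", re.I),
    ),
    Rule(
        "guaranteed_return",
        re.compile(r"\bguaranteed\s+(return|profit)s?\b", re.I),
    ),
]


def review_response(text: str) -> list[str]:
    """Return the names of every rule the draft response violates."""
    return [rule.name for rule in RULES if rule.pattern.search(text)]


def release_or_block(text: str) -> str:
    """Pass a clean response through unchanged; replace a flagged one with a refusal."""
    if review_response(text):
        return "This response was withheld by the compliance check."
    return text


print(review_response("You should buy the 2030s now."))
print(review_response("The bond's last traded yield was 5.1%."))
```

Every check of this kind adds latency to each answer, which is the trade-off between safeguards and speed that Kwiatkowski points to.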

Even if the evolutionary cycle is short, as Heleine notes, there are still many use cases to assess for this new technology, and big players are putting money into the research and development needed to drive that evolution forward.

The Bloomberg team says, “We have several interesting directions to pursue. First, task fine-tuning has yielded significant improvements in LLMs, and we plan to consider what unique opportunities exist for model alignment in the financial domain. Second, by training on data in [proprietary high-quality dataset] FinPile, we are selecting data that may exhibit less toxic and biased language. The effects of this on the final model are unknown as yet, which we plan to test. Third, we seek to understand how our tokenisation [which transforms the text into a format suitable for the language model] strategy changes the resulting model.”

©Markets Media Europe 2023
